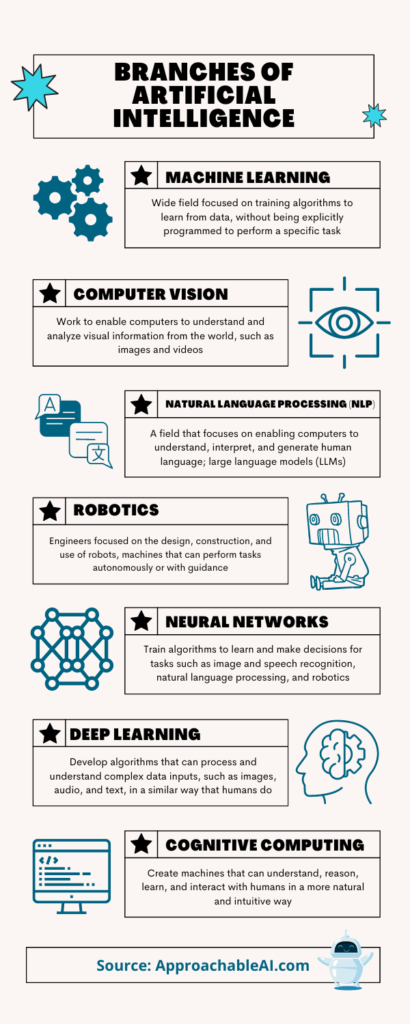

With all the buzz around generative AI, it’s easy to forget that artificial intelligence is actually a massive field of computer science with many individual branches.

As AI becomes a bigger part of our everyday lives, it’s helpful to understand how each of these disciplines has the potential to fundamentally change the way we interact with technology.

In this post, we outline as many branches of AI as we could get our hands on and explain what each one means.

We hope this is a valuable resource for anyone working on a research project, exploring career ideas, or expanding their knowledge of the AI space!

Branches of AI Infographic

Machine Learning

The first type of AI we will cover is machine learning. In short, machine learning involves teaching computers to learn from data in order to make predictions or decisions.

Imagine you’re a real estate agent with a massive database of real estate listings.

You could spend hours going through each property by hand.

But with machine learning, you can train a model to automatically categorize each property based on its location, size, number of bedrooms, etc.

The model can quickly sort properties that fit a particular buyer’s criteria, saving you countless hours of clicking through Zillow.

Supervised Learning

Supervised learning is a process where a machine learning model is trained on labeled data.

Labeled data is any example, such as an image, a piece of text, or a file, that has been tagged with the correct answer (a description or category).

The model learns to recognize patterns that link each input to its label. It is called supervised because the data has already been categorized, so the model is guided toward the correct answer.

Think of supervised learning as a teacher-student relationship. The teacher knows the answers, and the student tries to learn the relationship between the inputs and the outputs. In this case, the labeled data plays the teacher, and the model trained on that data is the student.

Two of the most common types of supervised learning are regression and classification.

Regression

Regression is a type of supervised learning that predicts a continuous output, like a stock price, tomorrow’s temperature, or sales revenue.

In the case of weather forecasting, the model considers things like temperature, satellite data, and radar readings to learn patterns and make predictions on future temperatures.

The output is continuous, meaning the model can predict any value within a range (say, 21.7°C) rather than picking from a fixed set of categories.
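To make this concrete, here is a minimal sketch of simple linear regression fit with ordinary least squares in plain Python. The temperature pairs are hypothetical, and a single input (today’s high) stands in for the many signals a real forecasting model would use.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) minimizing squared error for y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    slope, intercept = model
    return slope * x + intercept

# Hypothetical data: today's high (degrees C) and the next day's high.
today = [10.0, 12.0, 15.0, 18.0, 20.0]
tomorrow = [11.0, 13.0, 16.0, 19.0, 21.0]

model = fit_line(today, tomorrow)
print(predict(model, 16.5))  # 17.5
```

The prediction for 16.5 degrees (17.5) is not one of the training values; the fitted line can be evaluated anywhere in the range, which is what a continuous output means in practice.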

Classification

Another form of supervised learning is classification, which predicts a categorical output.

It’s used in applications like image recognition, spam detection, and sentiment analysis.

Classification models are trained on data labeled with categories (e.g., spam or not spam, or positive or negative sentiment). With two categories, this is called binary classification; with more than two, it is called multiclass classification.

Classification is like sorting fruit. Imagine you have a basket of apples and oranges and want to sort them into two separate baskets. That’s exactly what a classification model does: it takes in data and, based on what it has learned, assigns each item to a class.
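The fruit-sorting idea can be sketched with k-nearest neighbors, one of many classification algorithms (the article does not name a specific one). Each fruit is described by two hypothetical features on a 0-to-1 scale, redness and peel bumpiness, and a new fruit gets whichever label is most common among its k closest training examples.

```python
import math
from collections import Counter

# Hypothetical training data: ((redness, peel bumpiness), label).
training_data = [
    ((0.9, 0.10), "apple"),
    ((0.8, 0.20), "apple"),
    ((0.7, 0.15), "apple"),
    ((0.3, 0.70), "orange"),
    ((0.4, 0.80), "orange"),
    ((0.2, 0.90), "orange"),
]

def classify(features, data, k=3):
    """Label a new fruit by majority vote among its k nearest training examples."""
    nearest = sorted(data, key=lambda item: math.dist(features, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(classify((0.85, 0.20), training_data))  # apple
print(classify((0.30, 0.75), training_data))  # orange
```

Keeping both features on the same scale matters here: if one were measured in grams and the other from 0 to 1, the distance, and therefore the vote, would be dominated by weight.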

Unsupervised Learning

Unsupervised learning is a process where the model is not trained on labeled data.

Instead, the model must discover patterns in the data on its own, without a teacher to guide it.

Unsupervised learning is like exploring a new city without a map. Think of finding your way around New York without a map or GPS: you must pay close attention to your surroundings to determine where you are and how to get where you want to go.

One of the most common types of unsupervised learning is clustering.

Clustering

Clustering is a type of unsupervised learning that divides data into groups, or clusters, of similar items. Data scientists use it for tasks such as customer segmentation and anomaly detection.

One way to think of clustering is like grouping your friends. Imagine you have a list of 100 people and want to divide them into groups based on common characteristics such as age, interests, and occupation.

A clustering algorithm weighs all of these features at once and can reveal groupings of your friends that are not immediately apparent.
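One widely used clustering algorithm is k-means. The minimal sketch below assumes each friend is described by two hypothetical numbers, age and hours per week spent gaming, and that we want two groups. Nobody tells the algorithm which friend belongs where; it finds the groups from the data alone.

```python
import math

def kmeans(points, k, max_iterations=10):
    """Basic k-means (Lloyd's algorithm): assign each point to its nearest centroid,
    move each centroid to the mean of its points, and repeat until nothing changes."""
    centroids = [points[i] for i in range(k)]  # simple deterministic start: first k points
    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = [
            tuple(sum(values) / len(cluster) for values in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: the assignments will not change any more
        centroids = new_centroids
    return centroids, clusters

# Hypothetical friends described by (age, hours per week spent gaming).
friends = [(22, 9), (45, 1), (24, 8), (47, 2), (23, 10), (44, 1)]
centroids, clusters = kmeans(friends, k=2)
print(clusters)  # [[(22, 9), (24, 8), (23, 10)], [(45, 1), (47, 2), (44, 1)]]
```

This version starts from the first k points to stay deterministic. Practical implementations usually scale the features first and use randomized initialization (such as k-means++) with several restarts.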

Anomaly detection

Anomaly detection identifies data that deviates from the normal pattern. It is usually treated as an unsupervised learning problem, because labeled examples of anomalies are rare.

This is important in fraud detection and network intrusion detection.

Anomaly detection algorithms analyze a dataset and flag the data points that stand out as significantly different from the rest.
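A very simple statistical way to flag such points is the z-score: how many standard deviations a value sits from the mean. This is a stand-in for illustration rather than a production method; the transaction amounts are hypothetical, and the threshold of 2.0 is an assumed choice (2 and 3 are common).

```python
import statistics

def find_anomalies(values, threshold=2.0):
    """Return the values whose z-score (distance from the mean in standard deviations) exceeds threshold."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # every value is identical, so nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical card transactions in dollars; one is far out of line.
amounts = [42, 38, 55, 47, 51, 40, 44, 980]
print(find_anomalies(amounts))  # [980]
```

In small samples a single extreme value inflates the standard deviation itself, which is one reason practitioners often prefer more robust methods, such as median-based scores or isolation forests.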

Reinforcement Learning

In reinforcement learning, an agent learns how to perform a task optimally by interacting with its environment.

The agent learns by receiving rewards or penalties for its actions.

Reinforcement learning is well suited to tasks like playing games or teaching a robot a new skill.

The goal is to find the optimal way to win the game or complete the task.
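Tabular Q-learning is one classic reinforcement learning algorithm. In this minimal sketch, the environment is a hypothetical five-square corridor: the agent starts at the left end, earns +1 for reaching the right end, and pays a small penalty for every other move. The learning rate, discount factor, and exploration rate are illustrative assumptions, not tuned values.

```python
import random

N_STATES = 5        # squares 0..4; square 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right

def step(state, action):
    """Move within the corridor and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01  # reward at the goal, small penalty otherwise
    return next_state, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]: estimated future reward
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train()
policy = ["right" if row[1] > row[0] else "left" for row in q_table[:-1]]
print(policy)
```

Nobody tells the agent that moving right is correct; the preference emerges from the rewards alone. The `epsilon` parameter controls how often the agent tries a random action instead of the best one it currently knows, which is the classic exploration-exploitation trade-off.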

Deep Reinforcement Learning

Deep reinforcement learning is a type of reinforcement learning that uses deep neural networks to estimate which actions will lead to the most reward.

It is one of the approaches being explored in the development of autonomous vehicles.

In this case, the neural network is trained to make decisions such as selecting the safest route to reach its destination or when to apply defensive driving tactics.

The network is trained by simulating various scenarios and adjusting its decision-making based on the rewards it receives for its actions. This helps the vehicle’s AI system learn and make better decisions in real-world situations.

Computer Vision

This branch of AI focuses on the ability of machines to understand and analyze images and videos.

Computer vision is like giving eyes to a machine. It enables machines to interpret and understand the visual world similarly to humans.

Techniques such as image classification, object detection, image segmentation, and face recognition are key components of computer vision.

Businesses in industries ranging from security and surveillance to healthcare and entertainment use computer vision in their products.

Image classification

Image classification assigns a label to an image based on its contents.

It is one of the foundational tasks in computer vision: the goal is to sort images into predefined categories, such as dogs, cats, and cars.

This is done by training a model on a large dataset of labeled images.

The model then uses this information to make predictions on brand-new images.

Imagine you have a library of 1,000 books, and you want to organize them into fiction and non-fiction. Image classification works in a similar way by placing images into different classes.

Object detection

This field takes image classification a step further. It not only classifies the image but also identifies the location of objects within the image.

Object detection is like finding a specific book in a library. Image classification could tell you that the book is somewhere in the library; object detection also tells you exactly where it sits on the shelf.

Image segmentation

Image segmentation is a technique that splits an image into multiple parts. Each area corresponds to a different object or part of the image.

This is useful in applications where specific objects in an image must be isolated and analyzed. For example, in medical image analysis, segmentation can outline organs or tumors to help radiologists diagnose diseases.

Image segmentation is achieved through techniques such as contour detection, edge detection, and region-based methods.

Imagine you’re trying to navigate through a dense forest.

With image segmentation, computer vision can help you differentiate between the trees, bushes, and other objects in the environment to find the best path forward.
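To make the region-based idea mentioned above concrete, here is a minimal sketch of one classical approach: threshold a grayscale image, then group neighboring bright pixels into connected regions so each region gets its own label. The tiny image and the threshold are hypothetical, and many modern segmentation systems use deep learning instead.

```python
from collections import deque

def segment(image, threshold):
    """Label connected regions of pixels brighter than threshold (4-connectivity).
    Returns (labels, count): 0 marks background, 1..count mark separate regions."""
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] > threshold and labels[r][c] == 0:
                count += 1  # found an unlabeled bright pixel: start a new region
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of the region
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] > threshold and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

# Hypothetical 4x5 grayscale image containing two bright objects.
image = [
    [9, 9, 0, 0, 0],
    [9, 9, 0, 0, 7],
    [0, 0, 0, 8, 8],
    [0, 0, 0, 8, 8],
]
labels, count = segment(image, threshold=5)
for row in labels:
    print(row)
# [1, 1, 0, 0, 0]
# [1, 1, 0, 0, 2]
# [0, 0, 0, 2, 2]
# [0, 0, 0, 2, 2]
```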

Facial recognition

Facial recognition is a subfield of computer vision that identifies and verifies individuals based on their facial features.

Deep learning models analyze features such as a person’s eyes, nose, and mouth and encode them as a numerical facial signature that can be compared with stored signatures.

You see this technology used in unlocking your phone with your face. It is also used when verifying your identity to access certain buildings or in social media to automatically tag your friends in photos.
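Comparing facial signatures usually comes down to measuring how similar two numerical vectors (often called embeddings) are. The sketch below uses cosine similarity with a hypothetical threshold of 0.8. The four-number embeddings are made up for illustration; real systems compute much longer vectors with a trained neural network and tune the threshold carefully.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def is_same_person(embedding_a, embedding_b, threshold=0.8):
    """Verification decision: accept if the signatures are similar enough (threshold is an assumption)."""
    return cosine_similarity(embedding_a, embedding_b) >= threshold

# Hypothetical facial signatures.
enrolled = [0.9, 0.1, 0.3, 0.4]     # stored when the phone owner set up face unlock
new_scan = [0.85, 0.15, 0.35, 0.38]  # the owner, scanned again
stranger = [0.1, 0.9, 0.2, 0.1]

print(is_same_person(enrolled, new_scan))  # True
print(is_same_person(enrolled, stranger))  # False
```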

Natural Language Processing (NLP)

NLP is a subfield of AI that covers everything in the realm of computers and human-language interaction. NLP technologies aim to make computers understand, interpret, and accurately generate human language.

A related concept sometimes applied in NLP is fuzzy logic. It is a mathematical system for approximate reasoning, in which a statement can be partially true (to a degree between 0 and 1) rather than strictly true or false. This allows for more nuanced and flexible decision-making.
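A vague word like “warm” is a good example. A minimal sketch, assuming a hypothetical triangular membership function that peaks at 25°C; the `min` operator shown is one standard way (Zadeh’s) to combine fuzzy conditions with AND.

```python
def warm(temp_c):
    """Degree (0.0 to 1.0) to which temp_c counts as 'warm'.
    Hypothetical triangle: 0 at or below 15, rising to 1 at 25, back to 0 at or above 35."""
    if temp_c <= 15 or temp_c >= 35:
        return 0.0
    if temp_c <= 25:
        return (temp_c - 15) / 10
    return (35 - temp_c) / 10

def fuzzy_and(a, b):
    """Zadeh AND: a combined statement is only as true as its weakest part."""
    return min(a, b)

print(warm(20))  # 0.5, i.e. "somewhat warm" rather than simply true or false
print(warm(25))  # 1.0
humid = 0.9      # hypothetical degree to which it is "humid"
print(fuzzy_and(warm(22), humid))  # 0.7
```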

Additionally, large language models (LLMs) like GPT-3 and ChatGPT can perform many of the NLP tasks discussed below.

Text Classification

Text classification is an NLP task that categorizes text data into predefined classes.

This is useful for email filtering, news tagging, and sentiment analysis.

The model is trained on labeled text data, learning to recognize patterns that distinguish each class.

For instance, text classification can automatically sort news articles into categories such as politics, sports, technology, and entertainment.
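One classic way to build such a classifier is multinomial naive Bayes over word counts. The minimal sketch below works under simplifying assumptions: a four-headline hypothetical training set, whitespace tokenization, and add-one (Laplace) smoothing.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs):
    """docs: list of (text, label). Returns per-label word counts, label counts, and the vocabulary."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in docs:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab

def classify_text(text, model):
    """Pick the label with the highest log prior + sum of log word likelihoods."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_docs)
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing out the probability.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical labeled headlines.
training = [
    ("the team won the match", "sports"),
    ("striker scores late goal", "sports"),
    ("senate passes new budget bill", "politics"),
    ("president signs the bill", "politics"),
]
model = train_naive_bayes(training)
print(classify_text("goal in the final match", model))  # sports
print(classify_text("senate bill", model))              # politics
```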

Sentiment Analysis

Sentiment analysis identifies the emotion or opinion expressed in a piece of text.

Sentiment analysis models can determine whether a text expresses a positive, negative, or neutral sentiment. They can also be trained to recognize more nuanced signals like confusion, sarcasm, and humor, although these are much harder to detect reliably.

Some popular use cases for these tools are customer feedback analysis, brand monitoring, and opinion mining.
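Many production sentiment models are trained classifiers, but the simplest approach to illustrate is lexicon-based scoring: add up per-word scores and map the total to a label. The mini-lexicon below is hypothetical; real lexicons contain thousands of scored words.

```python
# Hypothetical word scores: positive numbers for positive words, negative for negative ones.
LEXICON = {"great": 2, "love": 2, "good": 1, "slow": -1, "bad": -1, "terrible": -2}

def sentiment(text):
    """Sum the lexicon scores of the words in text and map the total to a label."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this phone, the camera is great!"))      # positive
print(sentiment("Shipping was slow and support was terrible."))  # negative
print(sentiment("The box arrived on Tuesday."))                  # neutral
```

A word-counting approach like this has no notion of negation or sarcasm (“not great” still scores as positive), which is why the harder cases mentioned above call for trained models.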

Named Entity Recognition (NER)

NER is an NLP task that identifies named entities in text, such as people, organizations, and locations.

Think of NER as a detective who can pick out the key players or locations in a story.

NER is used for information extraction and text summarization. The NER algorithm scans the text, recognizes named entities, and labels each one with the appropriate entity type.

Part-of-Speech Tagging (POS)

POS tagging is an NLP task that assigns a part-of-speech tag, such as noun or verb, to each word in a sentence.

POS tagging is a helpful preprocessing step for tasks like text classification and sentiment analysis.

The POS algorithm scans the text and labels each word with its corresponding part-of-speech tag, providing a deeper understanding of the sentence structure.

Robotics

Robotics is an entire branch of engineering in its own right, covering everything from the conceptualization and construction of robots to their operation.

We have all seen robots on TV and in movies.

But modern robotics truly has the potential to revolutionize how we live and work, whether it’s delivery, construction, or security.

Autonomous Robots

Autonomous robots are robots that can perform tasks without human intervention.

They can make decisions and perform actions based on their environment and objectives.

For example, an autonomous robot can explore a disaster-stricken area to search for survivors, which is much safer than risking the lives of human rescuers.

Humanoid Robotics

Humanoid robots have a human-like appearance or form.

Engineers design these robots to perform human tasks like walking, speaking, and moving objects.

Companies like Boston Dynamics design robots with human-like (or dog-like) bodies built to perform sophisticated movements.

Neural Networks

Neural networks are a family of machine learning models loosely inspired by the structure of the human brain.

These networks consist of interconnected nodes, or neurons, that work together to solve complex problems.

Neural networks power tasks such as image and speech recognition, as well as complex decision-making.
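To show what interconnected neurons look like in code, here is a tiny two-layer network that computes XOR (true when exactly one input is true), a problem a single neuron cannot solve. The weights are hand-picked for illustration; a real network learns its weights from data during training.

```python
def step(x):
    """Step activation: the neuron 'fires' (outputs 1) when its input is positive."""
    return 1 if x > 0 else 0

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, passed through an activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)

def xor_network(a, b):
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron([a, b], [1, 1], -0.5)
    h_and = neuron([a, b], [1, 1], -1.5)
    # Output neuron fires when OR is true but AND is not.
    return neuron([h_or, h_and], [1, -1], -0.5)

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```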

Convolutional Neural Networks (CNN)

Convolutional neural networks (CNNs) are a type of neural network designed primarily for image recognition.

They rely on an operation called convolution, in which small filters slide across the image. During training, the network learns filter values that pick out features such as edges, textures, and shapes.
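The sliding-filter step can be sketched in a few lines. This minimal version computes a “valid” convolution (strictly, cross-correlation, which is what most deep learning libraries compute under the name convolution). The tiny image and the vertical-edge filter are hypothetical; a CNN would learn its filter values during training.

```python
def convolve2d(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Tiny grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge filter: responds where brightness increases left to right.
edge_filter = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, edge_filter))  # [[0, 18, 0], [0, 18, 0]]
```

The output is large only in the middle column, exactly where the dark-to-bright edge sits, which is the kind of feature map a CNN’s early layers produce.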

CNNs can achieve state-of-the-art performance on a variety of image recognition tasks.

For example, a trained CNN can identify and classify different types of fruits in an image. The network analyzes each section of the image, looking for key features, such as shape and color, to determine if a piece of fruit is a banana, apple, or orange.

Recurrent Neural Networks (RNN)

Recurrent neural networks (RNNs) are neural networks that process data sequences, such as time series data or text.

RNNs carry information forward from previous time steps, allowing them to learn patterns and make predictions based on earlier inputs. This makes them well suited to language translation and speech recognition.
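The “memory” of an RNN is a hidden state that is updated at each time step from the current input and the previous state. A minimal sketch with a single hidden unit and fixed, hypothetical weights (a trained RNN would learn them):

```python
import math

def rnn_forward(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit recurrent network over a sequence of numbers.
    Each new hidden state mixes the current input with the previous hidden state."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

early = rnn_forward([1.0, 0.0, 0.0])  # the signal arrives first, then silence
late = rnn_forward([0.0, 0.0, 1.0])   # same inputs, different order
print(round(early[-1], 3), round(late[-1], 3))
```

The final state of `early` is still positive even though its last two inputs were zero: the first input has been carried forward. The two sequences contain the same numbers yet end in different states, which is how an RNN can be sensitive to word order.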

For example, you could train an RNN on a large corpus of movie scripts to generate new ones. The network would learn the patterns of words and phrases in the originals and use them to generate new scripts that mimic their style and tone.

Deep Learning

Deep learning is another subcategory of machine learning. It uses deep neural networks, meaning networks with many layers, to perform complex tasks.

Deep learning has been successful in many applications, including natural language processing, which we covered earlier, as well as speech recognition and image recognition.

For example, deep learning models can be trained on large datasets of medical images to help detect various cancers.

The network analyzes thousands of labeled images, looking for patterns and features indicative of each type of cancer. The trained model can then flag likely cancers in new, unseen images, helping doctors make more accurate diagnoses.

Generative Adversarial Networks (GANs)

These networks consist of two neural networks: a generator network that creates new data samples and a discriminator network that attempts to distinguish the artificially generated samples from the real samples.

GANs are used for image synthesis and data augmentation.

One interesting application of GANs is creating realistic photographs of faces that don’t actually exist.

The generator creates a face while the discriminator evaluates whether it looks realistic. The generator then uses the feedback from the discriminator to refine its creation and make it look more realistic.

This process continues back and forth between the two networks until the generated face is nearly impossible to distinguish from a real one.

GANs can also create AI avatars, produce realistic AI-generated voices, or generate training data for other machine learning algorithms.

Transfer Learning

Transfer learning is a deep learning technique that adapts a neural network pre-trained on one task to a new task, typically by fine-tuning it, rather than training a network from scratch.

This approach can significantly reduce the data and computation required for training.

For example, you might start with a network pre-trained to identify cats and dogs in images, then fine-tune it on a new, smaller dataset of images of a particular dog breed.

Fine-tuning allows the network to recognize patterns and features specific to that breed of dog and thus improve its accuracy in identifying that breed.

Conclusion

From image recognition to text classification, from robotics to deep learning, the applications of AI are seemingly endless.

While we couldn’t cover every possible field in AI, we hope this guide helps you understand the enormous potential AI holds today.

The field of AI is ripe with opportunities for growth, learning, and discovery, whether you’re an experienced coder or a curious beginner.